Although it’s early in the game, I’m willing to believe that generative artificial intelligence, by whatever name (“large language models”, GPT-4, and so on), will have a significant effect on the world of work. But it’s not relevant to what I do, because for now, contracts are largely immune to a generative-AI takeover.

For one thing, mainstream contract drafting is a stew of dysfunction. In this 2022 post, I explain that if you train AI on dysfunction, the AI will only be able to replicate that dysfunction.

But today’s post looks at a different issue—how generative AI compares to using document-assembly software for creating contracts.

I was prompted to write this post by an exchange on Twitter yesterday. Someone published a poll asking which is better, creating contracts using document-assembly software such as Contract Express or manually replacing placeholders in a Word document. Someone said in reply, “This won’t age well. GPT-3 will upend this sector in months.” To which I responded, “I’m betting it won’t.”

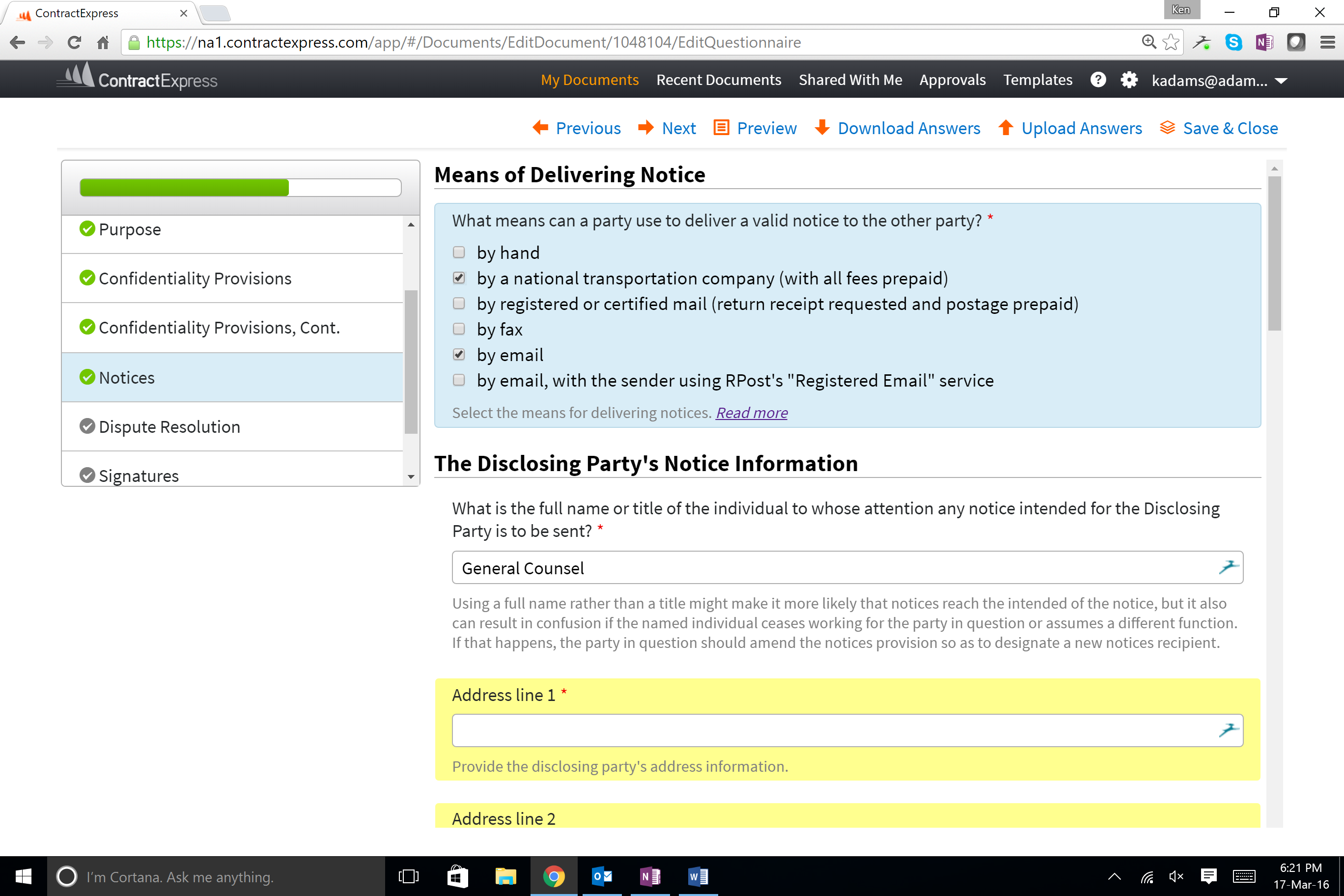

Consider how a sophisticated document-assembly template works. The user is presented with a questionnaire that asks the user to supply information or choose from among alternatives. The questionnaire offers the user guidance in tackling questions. If the questionnaire is intended for a broad group of users, it could offer extensive customization. The questionnaire for the automated confidentiality agreement I built 15 years ago using Contract Express is of that sort: depending on the extent to which you need bells and whistles, you could end up answering 70 or more questions. Presumably that’s why for years, Thomson Reuters used a screenshot from my questionnaire on their Contract Express web page. (The image above is a screenshot from that questionnaire.)

So a sophisticated document-assembly template would go a long way toward allowing users to create a contract that optimally addresses their needs, while offering control and transparency.
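To make those mechanics concrete, here’s a minimal Python sketch of a questionnaire driving a template. It assumes a toy confidentiality agreement. The questions, the clause wording, and the `validate` and `assemble` functions are hypothetical illustrations. They don’t reflect how Contract Express or any other product works, and a real template would be far larger.

```python
from string import Template

# Hypothetical questionnaire: each question has an id, a prompt, guidance
# (which a real interface would show next to the prompt; unused here),
# and optionally a fixed set of allowed answers.
QUESTIONS = [
    {"id": "disclosing_party", "prompt": "Name of the disclosing party?",
     "guidance": "Use the full legal name.", "choices": None},
    {"id": "recipient", "prompt": "Name of the recipient?",
     "guidance": "Use the full legal name.", "choices": None},
    {"id": "mutual", "prompt": "Will both parties disclose information?",
     "guidance": "Choose 'yes' for a mutual agreement.", "choices": ["yes", "no"]},
    {"id": "term_years", "prompt": "How many years do the obligations last?",
     "guidance": "Enter a whole number.", "choices": None},
]

# Hypothetical clause library: each entry pairs a condition on the answers
# with clause text containing $placeholders.
CLAUSES = [
    (lambda a: True,
     "This agreement is between $disclosing_party and $recipient."),
    (lambda a: a["mutual"] == "yes",
     "Each party may disclose Confidential Information to the other."),
    (lambda a: a["mutual"] == "no",
     "$disclosing_party will disclose Confidential Information to $recipient."),
    (lambda a: True,
     "The obligations under this agreement last $term_years years."),
]


def validate(answers):
    """Raise ValueError if a question is unanswered or an answer isn't an allowed choice."""
    for q in QUESTIONS:
        value = answers.get(q["id"])
        if value is None or value == "":
            raise ValueError(f"Missing answer: {q['prompt']}")
        if q["choices"] is not None and value not in q["choices"]:
            raise ValueError(f"{q['prompt']} must be one of {q['choices']}")


def assemble(answers):
    """Keep the clauses whose conditions are met and fill in their placeholders."""
    validate(answers)
    selected = [text for condition, text in CLAUSES if condition(answers)]
    return "\n".join(Template(text).substitute(answers) for text in selected)


if __name__ == "__main__":
    print(assemble({"disclosing_party": "Acme Corp.", "recipient": "Widget LLC",
                    "mutual": "no", "term_years": "3"}))
```

Even in a sketch this small, the expertise sits in the clause conditions and the guidance, which someone has to build in advance; the user just answers questions.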

If you wanted to create the same kind of contract using generative AI, to approximate a document-assembly questionnaire you’d have to come up with a whole bunch of prompts. Besides being laborious, that would require extraordinary expertise. The whole point of document assembly is that you build expertise into the template, so users can access it. You’d have to do the same sort of thing with generative AI if you want to give users access to expertise.

That’s presumably why in this LinkedIn post speculating about “A simple interface that lets non-expert user generate high quality first draft of legal contract using natural language,” Danish start-up lawyer Kristian Holt assumes that one element would be “Implementation of human reinforcement feedback from legal experts with adequate skill level.” It’s not obvious how you’d achieve that with generative AI.

So document-assembly technology is a great way of making contract-drafting expertise available to users. Generative AI? Not so much. So why is all the buzz about generative AI? Why aren’t we talking more about document assembly?

Because document assembly has been a notorious underachiever. That has nothing to do with shortcomings in the technology. Instead, it reflects that building a document-assembly system requires rummaging around in the entrails of contracts; in a copy-and-paste world, few people have the expertise and stomach for that. And most organizations can’t achieve the economies of scale required to make a document-assembly system cost-effective.

Doubtless plenty of people would be inclined to prostrate themselves at the altar of generative AI, but it’s not currently plausible enough for contracts. If someone were to build a document-assembly library of sophisticated templates of commercial contracts, we could forget about asking generative AI to draft contracts. (This isn’t entirely hypothetical.) But generative AI might play useful ancillary roles.

Ken:

Let’s pretend that we are two years in the future and half of firms are using generative AI of the quality we have right now to draft contracts for them. Given that, what would explain us having gotten there?

1. Ease of naive use. Using ChatGPT to generate an NDA is super-easy, at least to produce something that looks to the uneducated like a contract.

2. Indifference to quality. The contracts that most lawyers draft are pretty poor. So is one created by ChatGPT! If you’re willing to take a mediocre contract from a human, you are already willing to take a mediocre contract, which is what generative AI can produce.

3. Sensitivity to price and time. Anyone who wants a contract wants it now and for free. Lawyers don’t do that, but ChatGPT does.

So if you turn out to be wrong, I think those will be the reasons.

Chris

Hi Chris. You might misunderstand what I’m saying. I’m not predicting an outcome. Instead, I’m just saying what works best.

In my post on ChatGPT, I acknowledge that if you’re satisfied with copy-and-paste dysfunction, you might well be satisfied with having generative AI do your contracts. But if that’s where we end up, that doesn’t make me wrong. It just means we’ve exchanged one form of copy-and-paste dysfunction for another.

I largely agree with this post, except for one point I get to later in this comment. Given that the world is flush with templates, doc assembly tools, and guided drafters, we believe the real value of generative AI in contracts is in assisting review (both first-pass summary tasks and detailed deep dives on individual sections or clauses) and negotiation. Using techniques like retrieval-augmented generation (RAG), LLMs can compare a given text (from the contract being reviewed or negotiated) with your standards, checklists, playbooks, or repository with a fair degree of accuracy, although you have to handle the hallucination problem.

And, I’m extending myself here, if you frame the drafting problem as a review problem (that is, how close is the given first draft to your standards), generative AI can give you pretty useful hints, assuming your repository contains contracts that cover 99–100% of the choices you talk about. It’s a non-trivial engineering problem, but it’s something LLMs are suited for.

Now to the part of the post I don’t fully agree with: “Implementation of human reinforcement feedback from legal experts with adequate skill level.” It’s not obvious how you’d achieve that with generative AI. I believe that’s what the Harveys of the world are trying to do when building custom LLMs for law firms. The tool needs an intuitive UI (navigation, upvote and downvote, editing, and so on) so that expert lawyers can focus their attention on what the model has recommended and accept, edit, or delete it.